U.S. Curbs on AI Models Threaten AI Progress

Some figurines next to the ChatGPT logo. (PHOTO: VCG)

By GONG Qian

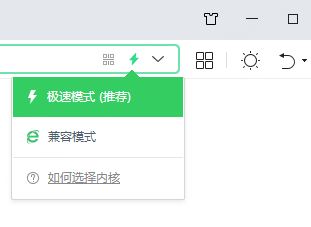

On May 22, the U.S. House Committee on Foreign Affairs passed a bipartisan bill that would make it easier for the Biden administration to restrict the export of proprietary, or closed-source, AI models (the core software behind AI systems such as ChatGPT) to China. The bill could have significant negative ripple effects on international AI cooperation, hindering scientific and technological progress and innovation for all of humankind. If the bill is passed, both China and the U.S. would be victims.

Curbs on AI models are unfair to the global community. As a global public good, open-source AI models represent the collective contributions of researchers, scholars and developers from around the world who adhere to the common principle of openness and sharing. The U.S. restriction, however, is a typical act of hegemony and long-arm jurisdiction that not only damages the interests of the global academic community but also runs counter to the original spirit of open-source sharing, Yin Ximing, an associate researcher at the School of Management of Beijing Institute of Technology and at the Tsinghua University Research Center for Technological Innovation, told Science and Technology Daily (S&T Daily). In the long term, it may reduce the international flow and exchange of scientific and technological expertise, weakening global talent networks and collaborative endeavours.

With limited access to critical technologies and data, the pace of ground-breaking scientific and technological innovation in AI would slow. Strengthening global cooperation on AI is an irreversible trend. The development of AI brings cross-border challenges, such as data security and ethical norms, that require the joint efforts and wisdom of the international community. The latest U.S. move deviates from the right track, Yang Yan, an associate professor who studies the AI industry at an institute in Tianjin, told S&T Daily.

To address these common challenges, China proposed the Global AI Governance Initiative in 2023, emphasizing the need for collaborative efforts. The country is also taking concrete steps to foster global cooperation. For example, China and France issued a joint declaration on AI and global governance in early May.

Meanwhile, according to Yin, the U.S. is attempting to curb China's rapid advancement in AI by raising barriers in data, algorithms and computing power, the three core elements of AI. It is doing so by restricting chip exports, prohibiting cloud computing providers from training large AI models for Chinese clients, restricting access to sensitive data, and restricting the use of AI models.

The U.S. claims that such restrictive measures stem from concerns about the use of AI in political elections, cybersecurity, and biological and chemical weapons. Unsurprisingly, however, the bill complements a series of measures adopted over the past few years to slow China's development of this cutting-edge technology. Clearly, its strategic intent is to preserve U.S. technological dominance and global influence.

Yin believes that the U.S. is also harming itself by placing restrictions on AI models. For one thing, the restrictions would increase compliance costs for U.S. tech companies, potentially reducing their willingness to engage in international cooperation. For another, they may lead to less knowledge sharing and less transparency, as companies and individual researchers might be reluctant to disclose their research findings because of stringent reviews.

China and the U.S. share common ground in the AI industry. Yang suggested that both sides enhance official-level communication and expand people-to-people exchanges and cooperation on AI. At the first meeting of their intergovernmental dialogue on AI, held in Geneva on May 14, both sides recognized that the development of AI technology presents both opportunities and risks. China and the U.S. are expected to work with the international community to jointly build a global AI governance framework, including standards and norms, based on broad consensus.